Top 15 Call Center KPI Benchmarks for 2026


Use call center KPI benchmarks to set realistic targets, identify performance gaps, and guide investment decisions. In 2026, most call centers and contact centers should track service level, average speed to answer, abandonment rate, first call resolution, customer satisfaction, customer effort score, average handle time, after-call work, transfer rate, repeat calls, occupancy and utilization, schedule adherence, forecast accuracy, cost per contact, and self-service containment. Treat every number as a range by channel and intent, not a single magic target. Pair call center metrics with root-cause narratives and a clear action plan to improve customer satisfaction, operational efficiency, and team performance.

By 2027, service leaders expect AI to resolve half of all cases, up from roughly a third in 2025, so KPI baselines for speed, effort, and resolution are shifting. At the same time, customers are 2.6× more likely to buy more when wait times are satisfactory and 2.1× more likely to recommend a brand after first-call resolution. That is why benchmarks for average speed to answer (ASA), service level (SL), and first call resolution (FCR) map directly to revenue.

Benchmarking is not about copying the industry average. It is about locating your current state on a realistic scale, then running a repeatable cadence to close the gap. You will track performance data, compare against external reference points and direct competitors where available, and tune targets by channel, intent, and value. The outcome is a common language for call center performance, faster decision-making, and fewer arguments about “what good looks like.”

In the rest of this article, you’ll get pragmatic call center KPI benchmarks, how to tailor them by channel and intent, and a simple cadence for comparing your performance against industry standards without copying the average.

Treat benchmarks as a playbook, not a scoreboard. Start by anchoring each KPI to a clear decision and the moment in the customer journey it affects, then compare performance in a way that reflects how your center actually operates. With that lens, use the guidance below to set targets that are fair, directional, and immediately actionable.

Publish context with every metric. Center performance metrics only matter when leaders know what changed and what will change next. Write one line on the cause, one line on the fix, and one line on the expected impact.

Below are practical call center KPI benchmarks expressed as typical target ranges, not universal rules. Calibrate to your vertical, value segments, and customer journey stage. When in doubt, measure a 90-day baseline, then set targets one notch tighter than your current median.
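
As a rough illustration of that rule, the Python sketch below computes the median of a 90-day baseline and tightens it by one step. The function name and the 5% "notch" are assumptions for illustration only. The article does not define how large a notch should be.

```python
from statistics import median


def tighter_target(baseline, notch=0.05, lower_is_better=True):
    """Return a target one 'notch' tighter than the baseline median.

    baseline: daily KPI values from the measurement window (e.g. 90 days).
    notch: hypothetical relative step; calibrate it to your own data.
    """
    if not baseline:
        raise ValueError("baseline must contain at least one value")
    mid = median(baseline)
    # Lower-is-better KPIs (ASA, abandonment) tighten downward;
    # higher-is-better KPIs (FCR, CSAT) tighten upward.
    factor = 1 - notch if lower_is_better else 1 + notch
    return round(mid * factor, 2)


# Abandonment rate (%) per day; a real baseline would hold ~90 values.
print(tighter_target([6.0, 7.0, 8.0]))  # 6.65
# FCR (%) per day, where higher is better.
print(tighter_target([70.0, 75.0, 80.0], lower_is_better=False))  # 78.75
```

A relative step keeps the rule usable across KPIs with different units. For percentages that already sit near 100%, cap the result or switch to a fixed percentage-point step.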

Service level (SL)

What it is: The percent of calls answered within a set time threshold.

2026 benchmark: Voice queues often sit between 75/30 (75% of calls answered within 30 seconds) and 85/20 (85% within 20 seconds), depending on value and intent. Digital channels should publish response-time SLAs instead of a call-style speed threshold.

Why it matters: SL is your public promise. Hit it, and you cut wait-driven abandonment and complaints about poor service.
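
To make the X/Y notation concrete, here is a minimal Python sketch that computes service level from a list of answer waits. It assumes the denominator is answered calls only. Many centers also count abandoned calls in the denominator, so state which convention your report uses.

```python
def service_level(answer_waits_s, threshold_s=20):
    """Percent of answered calls picked up within threshold_s seconds.

    answer_waits_s: wait time in seconds for each answered call.
    Assumption: abandoned calls are excluded from the denominator.
    """
    if not answer_waits_s:
        return 0.0
    within = sum(1 for wait in answer_waits_s if wait <= threshold_s)
    return 100.0 * within / len(answer_waits_s)


waits = [5, 12, 18, 25, 40, 8, 19, 22, 3, 15]
print(service_level(waits, threshold_s=20))  # 70.0, short of an 80/20 target
```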

Average speed to answer (ASA)

What it is: The average time from entering the queue to reaching a live agent.

2026 benchmark: 20–40 seconds for voice in mainstream queues; VIP or critical intents trend faster.

Why it matters: ASA sets the caller's mood before the conversation even starts. Long waits drive up abandonment and repeat calls.
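
ASA itself is a simple mean, as the minimal Python sketch below shows (the function name is illustrative). Because it is an average, a few very long waits can hide behind a healthy number. That is one reason to read ASA next to SL.

```python
def average_speed_to_answer(answer_waits_s):
    """Mean seconds from queue entry to live answer, answered calls only."""
    if not answer_waits_s:
        return 0.0
    return sum(answer_waits_s) / len(answer_waits_s)


waits = [5, 12, 18, 25, 40, 8, 19, 22, 3, 15]
print(average_speed_to_answer(waits))  # 16.7 seconds
```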

Abandonment rate

What it is: The percent of incoming calls that disconnect before being answered.

2026 benchmark: 3–8% for voice after IVR/menu tuning; lower for callbacks. Measure “abandon after X seconds” to remove immediate hang-ups.

Why it matters: Abandonment is a hard cost of delay and a warning light for staffing or menu design.
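
The "abandon after X seconds" rule can be sketched as a filter applied before the division. In this minimal Python example, each call is a hypothetical (wait_seconds, answered) pair. The 5-second cutoff is an assumed value for X, not a standard.

```python
def abandonment_rate(calls, short_abandon_s=5):
    """Percent of offered calls abandoned before answer.

    calls: list of (wait_seconds, answered) tuples.
    short_abandon_s: assumed cutoff; unanswered calls that hung up
    within this many seconds are treated as immediate hang-ups and
    excluded from both numerator and denominator.
    """
    counted = [(wait, answered) for wait, answered in calls
               if answered or wait > short_abandon_s]
    if not counted:
        return 0.0
    abandoned = sum(1 for _, answered in counted if not answered)
    return 100.0 * abandoned / len(counted)


calls = [(12, True), (3, False), (45, False), (20, True), (8, True),
         (2, False), (30, True), (60, False), (15, True), (10, True)]
print(abandonment_rate(calls))                     # 25.0
print(abandonment_rate(calls, short_abandon_s=0))  # 40.0
```

The gap between the two results shows why the cutoff must be documented: the same traffic can look healthy or alarming depending on X.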

First call resolution (FCR)

What it is: The percent of issues resolved on the first contact, without follow-up or transfer.

2026 benchmark: 70–85% for most call centers, with technical and multi-party cases lower. Track by intent, not centerwide.

Why it matters: FCR drives customer loyalty, reduces repeat volume, and lowers contact center costs.
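
Because the benchmark says to track FCR by intent, a minimal Python sketch might group issues before dividing. The record fields (intent, contacts, transferred) are hypothetical. Here an issue counts as resolved on first contact only if it took a single contact and was never transferred.

```python
from collections import defaultdict


def fcr_by_intent(issues):
    """First call resolution rate (%) for each intent."""
    totals = defaultdict(int)
    resolved_first = defaultdict(int)
    for issue in issues:
        totals[issue["intent"]] += 1
        if issue["contacts"] == 1 and not issue["transferred"]:
            resolved_first[issue["intent"]] += 1
    return {intent: 100.0 * resolved_first[intent] / n
            for intent, n in totals.items()}


issues = [
    {"intent": "billing", "contacts": 1, "transferred": False},
    {"intent": "billing", "contacts": 1, "transferred": False},
    {"intent": "billing", "contacts": 2, "transferred": False},
    {"intent": "billing", "contacts": 1, "transferred": False},
    {"intent": "technical", "contacts": 1, "transferred": True},
    {"intent": "technical", "contacts": 1, "transferred": False},
]
print(fcr_by_intent(issues))  # {'billing': 75.0, 'technical': 50.0}
```

A single centerwide number for this sample (about 67%) would hide that technical issues resolve far less often on first contact.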

Customer satisfaction (CSAT)

What it is: A post-interaction rating, typically on a 1–5 or 1–7 scale.

2026 benchmark: 80–90% satisfied on resolved contacts; lower where policies constrain what agents can offer.

Why it matters: CSAT remains the quickest way to assess customer satisfaction at the interaction level.
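
One common way to turn ratings into a "percent satisfied" figure is top-two-box scoring, which this minimal Python sketch assumes: on a 1–5 scale, only 4s and 5s count as satisfied. Your survey vendor may define "satisfied" differently.

```python
def csat_percent(ratings, scale_max=5, top_box=2):
    """Share (%) of ratings in the top `top_box` points of the scale."""
    if not ratings:
        return 0.0
    cutoff = scale_max - top_box + 1  # 4 on a 1-5 scale, 6 on a 1-7 scale
    satisfied = sum(1 for rating in ratings if rating >= cutoff)
    return 100.0 * satisfied / len(ratings)


print(csat_percent([5, 4, 3, 5, 2, 4, 5, 5, 4, 1]))  # 70.0
```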

Customer effort score (CES)

What it is: A post-interaction survey question such as "How much effort did it take to resolve your issue?"

2026 benchmark: Targets vary by scale; aim to keep “difficult” responses below 10–15% on resolved interactions.

Why it matters: CES predicts repeat calls and churn better than satisfaction alone because it captures the effort the average caller invests.
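
The "difficult" share can be computed the same way. This minimal Python sketch assumes a hypothetical 1–7 scale where 1 means very easy and 7 means very difficult, with 6 and 7 counted as difficult. To match the benchmark, pass in scores from resolved interactions only.

```python
def difficult_share(scores, difficult_from=6):
    """Percent of CES responses at or above `difficult_from`.

    Assumes a 1-7 scale where higher means more effort; adjust
    difficult_from to your own survey wording and scale.
    """
    if not scores:
        return 0.0
    difficult = sum(1 for score in scores if score >= difficult_from)
    return 100.0 * difficult / len(scores)


resolved_scores = [1, 2, 2, 3, 7, 1, 2, 6, 1, 2]
print(difficult_share(resolved_scores))  # 20.0, above the 10-15% guide
```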

Average handle time (AHT)

What it is: Talk time + hold time + after-call work (ACW), averaged per contact.

2026 benchmark: Voice 4–7 minutes for general service; complex tech or regulated queues longer. Chat depends on concurrency and ranges from 6 to 12 minutes per conversation.

Why it matters: AHT converts contact volume into workload and staffing needs. Read it with FCR and quality in mind, so you don’t optimize for speed alone.
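
The Python sketch below shows how AHT converts contact volume into workload. It is a minimal example under two assumptions: each contact is a hypothetical (talk, hold, ACW) tuple in seconds, and workload is expressed in Erlangs for a 30-minute interval.

```python
def average_handle_time(contacts):
    """AHT in seconds: (talk + hold + after-call work) per contact.

    contacts: list of (talk_s, hold_s, acw_s) tuples.
    """
    if not contacts:
        return 0.0
    total = sum(talk + hold + acw for talk, hold, acw in contacts)
    return total / len(contacts)


def workload_erlangs(calls_in_interval, aht_s, interval_s=1800):
    """Offered workload for one interval: calls x AHT / interval length."""
    return calls_in_interval * aht_s / interval_s


aht = average_handle_time([(240, 30, 60), (300, 0, 90), (180, 60, 30)])
print(aht)                         # 330.0 seconds (5.5 minutes)
print(workload_erlangs(120, aht))  # 22.0 Erlangs
```

A workload of 22 Erlangs means roughly 22 agents' worth of continuous work on average. Hitting a service level target, and keeping occupancy below burnout levels, requires staffing above that figure.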

After-call work (ACW)

What it is: Wrap-up time per contact.

2026 benchmark: 30–90 seconds for mature flows; longer for heavy documentation.

Why it matters: Excess ACW hides process or tooling issues and reduces agent availability for new calls.

Transfer rate

What it is: The percent of contacts moved to another queue or tier.

2026 benchmark: 10–20% for mixed complexity; lower in one-and-done environments.

Why it matters: Transfers extend the customer journey and can signal gaps in routing, knowledge, or agent skills.

Repeat calls

What it is: The share of customers calling back about the same issue within X days.

2026 benchmark: 10–15% for mainstream service when FCR is healthy.

Why it matters: Repeat calls inflate contact center costs and indicate friction with documentation or policy.
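
Here is a minimal Python sketch of the repeat-call rate. The contact records (customer, issue, date) are hypothetical, and `window_days` stands in for the article's "X days." Choose a window that matches your own policy, for example seven days.

```python
from datetime import date, timedelta


def repeat_call_rate(contacts, window_days=7):
    """Percent of customer-issue pairs with a callback within window_days.

    contacts: list of (customer_id, issue, contact_date) tuples.
    The window is measured from the first contact about each issue.
    """
    first_contact = {}
    called_back = set()
    for customer, issue, day in sorted(contacts, key=lambda c: c[2]):
        key = (customer, issue)
        if key not in first_contact:
            first_contact[key] = day
        elif day - first_contact[key] <= timedelta(days=window_days):
            called_back.add(key)
    if not first_contact:
        return 0.0
    return 100.0 * len(called_back) / len(first_contact)


contacts = [
    ("c1", "billing", date(2026, 1, 5)),
    ("c1", "billing", date(2026, 1, 8)),
    ("c2", "login", date(2026, 1, 5)),
    ("c2", "login", date(2026, 1, 20)),
    ("c3", "refund", date(2026, 1, 6)),
    ("c4", "billing", date(2026, 1, 7)),
]
print(repeat_call_rate(contacts))                  # 25.0
print(repeat_call_rate(contacts, window_days=30))  # 50.0
```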

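Occupancy and utilization

What it is: The share of time agents spend handling work rather than waiting for it. Occupancy measures this against logged-in time; utilization measures it against total paid time.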

2026 benchmark: Voice 75–85% occupancy; lower for concurrent chat to avoid overload.

Why it matters: Healthy occupancy keeps agents engaged without burnout and protects service quality.
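
Occupancy and utilization share a numerator but not a denominator. This minimal Python sketch uses one common pair of definitions. Definitions vary across WFM tools, so treat them as assumptions.

- Occupancy divides handling time by logged-in time spent either handling contacts or available for them.
- Utilization divides handling time by total paid time.

```python
def occupancy(handle_s, available_idle_s):
    """Occupancy (%): handling time / (handling + available idle time)."""
    staffed = handle_s + available_idle_s
    return 100.0 * handle_s / staffed if staffed else 0.0


def utilization(handle_s, paid_s):
    """Utilization (%): handling time / total paid time."""
    return 100.0 * handle_s / paid_s if paid_s else 0.0


# One 8-hour shift: 6 h handling, 1.5 h available but idle,
# 0.5 h of breaks and training.
print(occupancy(6 * 3600, 1.5 * 3600))  # 80.0
print(utilization(6 * 3600, 8 * 3600))  # 75.0
```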

Schedule adherence

What it is: How closely agents' actual status matches their planned schedule.

2026 benchmark: 85–92% at the interval level with documented exceptions.

Why it matters: Adherence connects forecasting to reality, ensuring service-level targets remain credible.
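
Interval-level adherence can be approximated by comparing planned and actual states slot by slot. This minimal Python sketch assumes 15-minute slots and hypothetical state labels. Many WFM tools instead measure adherence in minutes and handle approved exceptions separately.

```python
def schedule_adherence(planned, actual):
    """Percent of intervals where the actual state matched the plan."""
    if len(planned) != len(actual):
        raise ValueError("planned and actual must cover the same intervals")
    if not planned:
        return 0.0
    matches = sum(1 for plan, real in zip(planned, actual) if plan == real)
    return 100.0 * matches / len(planned)


planned = ["phone", "phone", "phone", "break", "phone", "phone", "phone", "phone"]
actual = ["phone", "phone", "phone", "phone", "break", "phone", "phone", "phone"]
print(schedule_adherence(planned, actual))  # 75.0: the break ran 15 minutes late
```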

Forecast accuracy

What it is: The error between forecast and actual contacts or workload.

2026 benchmark: Day-level error of 5–8% for mature voice lines; 10–12% for digital.

Why it matters: Accurate forecasts reduce overstaffing and understaffing, stabilizing response time and agent satisfaction.
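
Day-level forecast error is often reported as a mean absolute percentage error (MAPE), the convention this minimal Python sketch assumes. Days with zero actual volume are skipped to avoid dividing by zero.

```python
def daily_mape(forecast, actual):
    """Mean absolute percentage error (%) across days."""
    if len(forecast) != len(actual):
        raise ValueError("forecast and actual must cover the same days")
    errors = [abs(f - a) / a for f, a in zip(forecast, actual) if a]
    return 100.0 * sum(errors) / len(errors) if errors else 0.0


forecast = [900, 1100, 1000, 1000]
actual = [1000, 1000, 1000, 1000]
print(daily_mape(forecast, actual))  # 5.0, inside the 5-8% voice range
```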

Cost per contact

What it is: All-in cost divided by the number of contacts handled.

2026 benchmark: Highly variable by industry and channel; the target is a downward trend without harming experience.

Why it matters: Puts operational efficiency and service quality in one view for Finance and Operations.
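
Cost per contact is a simple division, but reporting it by channel makes visible the variation the benchmark warns about. In this minimal Python sketch the channel names and cost figures are hypothetical. Each cost is assumed to be all-in (labor, technology, and overhead) for the same period.

```python
def cost_per_contact(costs_by_channel, contacts_by_channel):
    """Return (per-channel cost per contact, overall cost per contact)."""
    per_channel = {
        channel: costs_by_channel[channel] / handled
        for channel, handled in contacts_by_channel.items()
        if handled > 0 and channel in costs_by_channel
    }
    total_contacts = sum(contacts_by_channel.values())
    total_cost = sum(costs_by_channel.values())
    overall = total_cost / total_contacts if total_contacts else 0.0
    return per_channel, overall


per_channel, overall = cost_per_contact(
    {"voice": 150_000, "chat": 45_000},
    {"voice": 25_000, "chat": 15_000},
)
print(per_channel)  # {'voice': 6.0, 'chat': 3.0}
print(overall)      # 4.875
```

Tracking the overall figure alone can hide a shift in channel mix. The per-channel trend shows whether costs are actually falling.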

Self-service containment

What it is: The share of intents resolved by IVR, bots, or the help center without an agent touching them.

2026 benchmark: 20–60% depending on automation maturity and intent mix.

Why it matters: High-quality self-service options reduce the number of calls agents must answer and free them for complex work, lifting FCR on the remaining load.
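
Here is a minimal Python sketch of containment. It assumes each self-service session is recorded as a hypothetical (resolved, reached_agent) pair. Sessions where the customer simply gave up are not counted as contained, because counting them would inflate the rate.

```python
def containment_rate(sessions):
    """Percent of self-service sessions resolved without reaching an agent."""
    if not sessions:
        return 0.0
    contained = sum(1 for resolved, reached_agent in sessions
                    if resolved and not reached_agent)
    return 100.0 * contained / len(sessions)


sessions = [(True, False), (True, False), (False, True),
            (True, True), (False, False)]
print(containment_rate(sessions))  # 40.0
```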

Every vertical carries different risks, regulations, and emotions. Use these call center KPI benchmarks by industry as starting ranges, then tune them by intent and value tier.

Customer context: Order status, returns, payments, promotions.

Typical targets: SL 80/20 for voice during business hours, ASA 20–30s, abandonment ≤5%, FCR 75–85%, CSAT 85–90%, CES “difficult” ≤10%.

Notes: Expect high peaks around product drops and holidays. Invest in self-service for status and returns to cut repeat calls and raise agent productivity for edge cases.

Customer context: Authentication, fraud, claims, policy changes.

Typical targets: SL 80/20 or faster for high-risk lines, ASA ≤20s on priority, abandonment ≤3–5%, FCR 70–80% with strong compliance notes.

Notes: Transfers may be higher due to entitlements. Tie AHT to quality; rushing increases rework and contact center costs.

Customer context: Setup, billing, troubleshooting.

Typical targets: SL 75/30 for general, VIP faster; FCR 70–85%; AHT can be 7–12 minutes for technical issues; CSAT 85–90%.

Notes: Knowledge freshness and guided workflows are the FCR lever. Track repeat calls within seven days.

Customer context: Appointments, benefits, medication questions.

Typical targets: SL 80/20 or stricter by regulation, abandonment ≤3–5%, CSAT ≥88% on resolved contacts, transfers controlled by role and privacy rules.

Notes: Compliance extends AHT and ACW; measure empathy and clarity alongside speed to assess customer happiness and service quality.

Customer context: Changes, disruptions, loyalty.

Typical targets: Highly seasonal. SL flexes by event; ASA 20–40s baseline; FCR 70–80%; abandonment ≤5–8% with virtual hold.

Notes: Proactive messaging reduces spikes. Measure multi-touch journeys rather than single calls in isolation.

Customer context: Outages, billing, activation.

Typical targets: SL 80/20 normal, surge playbooks for outages; FCR 70–85%; containment high for status updates.

Notes: Separate incident traffic from routine service to prevent benchmarks from blurring during events.

Many call centers still look at center metrics in isolation: a voice SLA here, a chat AHT there. In 2026, top programs benchmark the end-to-end customer journey across channels instead.

This lens prevents gaming and aligns targets with the customer journey instead of a siloed queue.

Think of benchmarking as a tight weekly–monthly rhythm, not a one-off project. You’re building a repeatable loop that compares like with like, turns gaps into owned actions, and shows progress over time. Use the steps below to keep the cadence clear, fair, and relentlessly actionable.

Report weekly and monthly. Show trend lines, not snapshots, and include two customer feedback quotes to humanize the data.

Benchmarking fails when shortcuts creep in. Guard against them so your call center KPI benchmarks stay comparable over time, reflect channel realities, and translate into owned improvements.

Here’s a simple, repeatable rollout that turns benchmarking from a spreadsheet exercise into an operating habit. Use these phases to lock definitions, set targets, and create a weekly rhythm where insights drive action.

Days 1–30: Baseline and definitions

Lock metric definitions, collect twelve months of data, and publish current ranges by channel and top ten intents. Agree on three outcome KPIs for the quarter, such as customer satisfaction score, first call resolution, and abandonment.

Days 31–60: Targets and playbooks

Set floor, target, and stretch values for each KPI. Write playbooks for the top five gaps, using levers such as staffing changes, knowledge fixes, self-service options, and agent training. Turn on a weekly review that shows performance data with one-line causes and one-line actions.

Days 61–90: Measure and iterate

Report call center performance against the new targets, including verbatim customer feedback. Highlight two wins and two risks each week. Adjust targets where the intent mix or seasonality changed. Publish a quarterly “what changed and why” to keep contact center leaders aligned.
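
The floor, target, and stretch bands set in days 31–60 can be applied mechanically in the weekly review. This minimal Python sketch labels a KPI value against its bands. The function name and the example thresholds are illustrative assumptions, not recommended targets.

```python
def rate_against_targets(value, floor, target, stretch, higher_is_better=True):
    """Label a KPI value as 'stretch', 'target', 'floor' or 'below floor'."""
    if not higher_is_better:
        # Negate everything so the same >= comparisons work for
        # lower-is-better KPIs such as abandonment or ASA.
        value, floor, target, stretch = -value, -floor, -target, -stretch
    if value >= stretch:
        return "stretch"
    if value >= target:
        return "target"
    if value >= floor:
        return "floor"
    return "below floor"


# FCR (%), higher is better.
print(rate_against_targets(78, floor=70, target=75, stretch=80))  # target
# Abandonment (%), lower is better.
print(rate_against_targets(4.0, floor=8, target=5, stretch=3,
                           higher_is_better=False))               # target
print(rate_against_targets(9.0, floor=8, target=5, stretch=3,
                           higher_is_better=False))               # below floor
```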

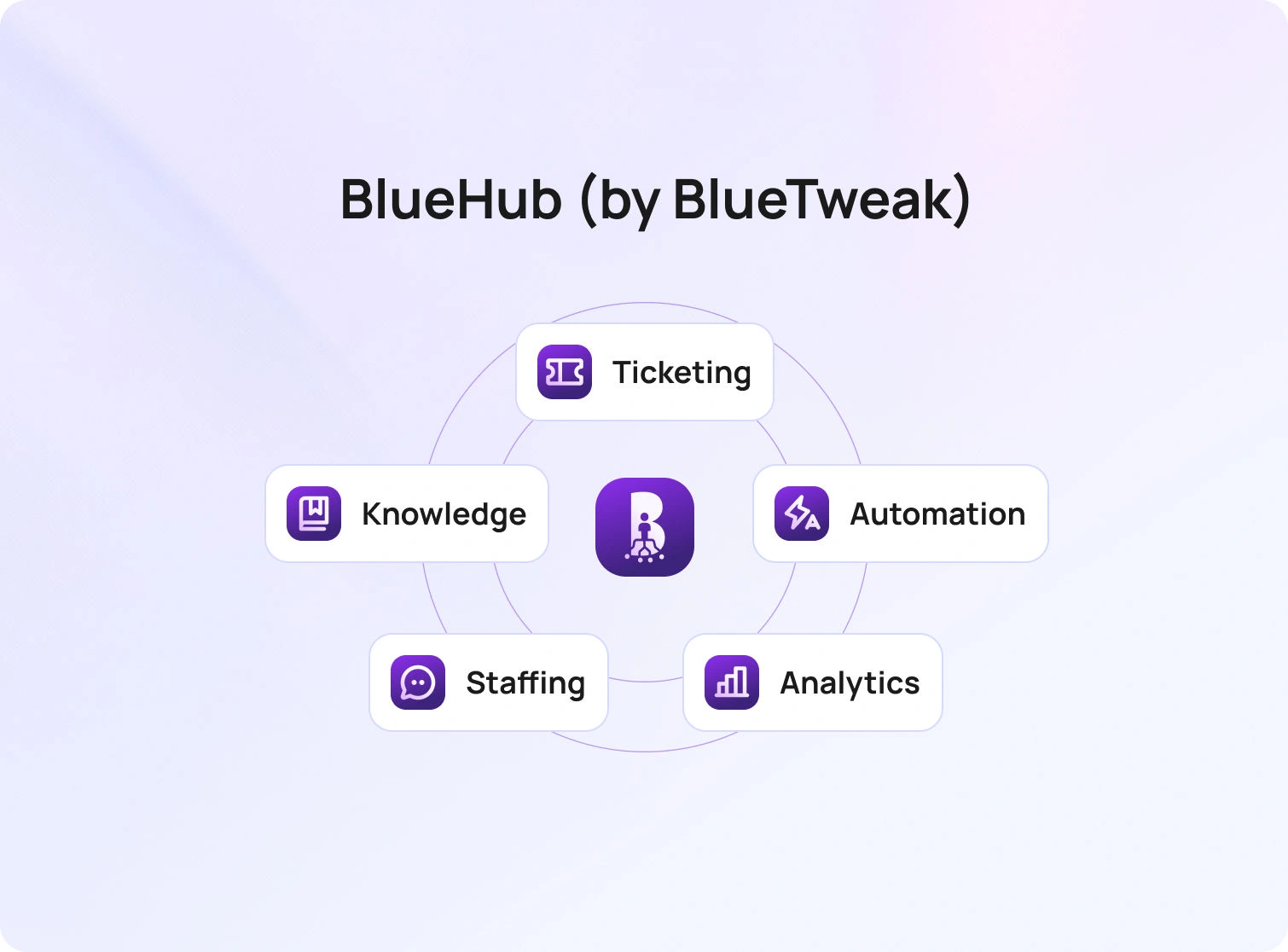

BlueHub (by BlueTweak) makes benchmarking routine. Your channels sit in one workspace, and the core KPIs populate automatically by intent and channel. That includes SL, ASA, abandonment, FCR, CSAT, CES, AHT, ACW, transfers, repeat calls, occupancy, adherence, forecast accuracy, cost per contact, and self-service containment. Leaders see trend lines against floor, target, and stretch, and can pivot from center metrics to underlying conversations in a click.

Clean joins make comparisons trustworthy. APIs and webhooks attach case IDs, intents, brand, language, and queue to every interaction, while AI summaries and sentiment tagging convert raw conversations into structured performance data. That context lets you publish center key performance indicators with one line for the cause, one for the fix, and one for the expected impact, without manual stitching.

Benchmarks become actions, not screenshots. Suggested replies cite the right knowledge article, WFM views align staffing with the forecast and SL exposure, and routing rules adjust by intent when repeat calls or transfer rates spike. Teams close gaps with targeted playbooks across staffing, knowledge, and self-service, and BlueHub tracks outcomes in customer satisfaction, customer effort score, and contact center costs.

If you already own analytics or workforce management software, BlueHub integrates with it to keep a single source of truth. If you do not, BlueHub provides out-of-the-box scorecards, adherence and intraday views, and intent-level reporting, so you can launch a weekly and monthly benchmarking cadence immediately and measure progress against the 2026 targets outlined in this guide.

Great benchmarking pairs numbers with a narrative. Use these call center KPI benchmarks to set intent-level targets by channel. Then write short explanations and action plans that tie center KPIs to better customer interactions and lower contact center costs. When you treat benchmarks as a living contract (reviewed weekly, tuned quarterly), you will raise team performance, improve customer satisfaction, and give leaders the clarity they need to invest confidently in call center operations and customer experience.

If you want those call center KPI benchmarks to update automatically and tie directly to actions, BlueHub brings routing, WFM, knowledge, and analytics into one workspace. Scorecards populate by intent and channel, leaders track trends against targets, and teams get clear next steps. See how it maps to your queues and metrics. Book a BlueHub demo.

Call center benchmarking compares your center’s performance metrics with external references and with your own history. It gives call center managers and contact center leaders realistic ranges for key performance indicators and shows where changes will improve customer satisfaction and operational efficiency fastest. BlueHub centralizes routing, knowledge, WFM, and analytics so those benchmarks update in one place and tie directly to actions.

Revisit quarterly for targets and weekly for progress. Many call centers adjust by season and by campaign as customer expectations and contact volume change. When product, policy, or staffing shifts occur, update the forecast, then reset ranges. BlueHub’s intent-level dashboards make these resets simpler by comparing trends across channels without manual stitching.

For most contact centers, the combination of first-call resolution, customer effort score, and abandonment accounts for the largest swings in loyalty. Keep FCR rising, keep effort low, and reduce waits that trigger poor customer service experiences.

Outbound call centers emphasize connect rate, right-party contact rate, conversion rate, AHT, compliance, and cost per successful outcome. The same discipline applies: benchmark by campaign and intent, not a single roll-up.

Accurate forecasting, fair schedules, and real-time adjustments keep service levels and response times stable while protecting agent performance and satisfaction. Workforce management helps you hit service level targets at the lowest reasonable cost and reduces human error in the scheduling process.

As Head of Digital Transformation, Radu oversees multiple departments across the company, providing visibility into what happens in product and what customers need. With more than eight years in technology, and part of BlueTweak since the beginning, Radu moved from a developer role (addressing end-customer needs) to a more business-oriented one, so he could have more influence and work directly with the people who use the technology.


Copyright BlueTweak 2025